Research

The research activities I have been involved in during my past and current positions have revolved around two axes, sometimes explored independently and sometimes jointly:

- the design, implementation and evaluation of human-human and human-computer interaction models based on human socio-cognitive functions;

- the use of social signal processing to understand, interpret and predict human behavior during interactions.

My main projects

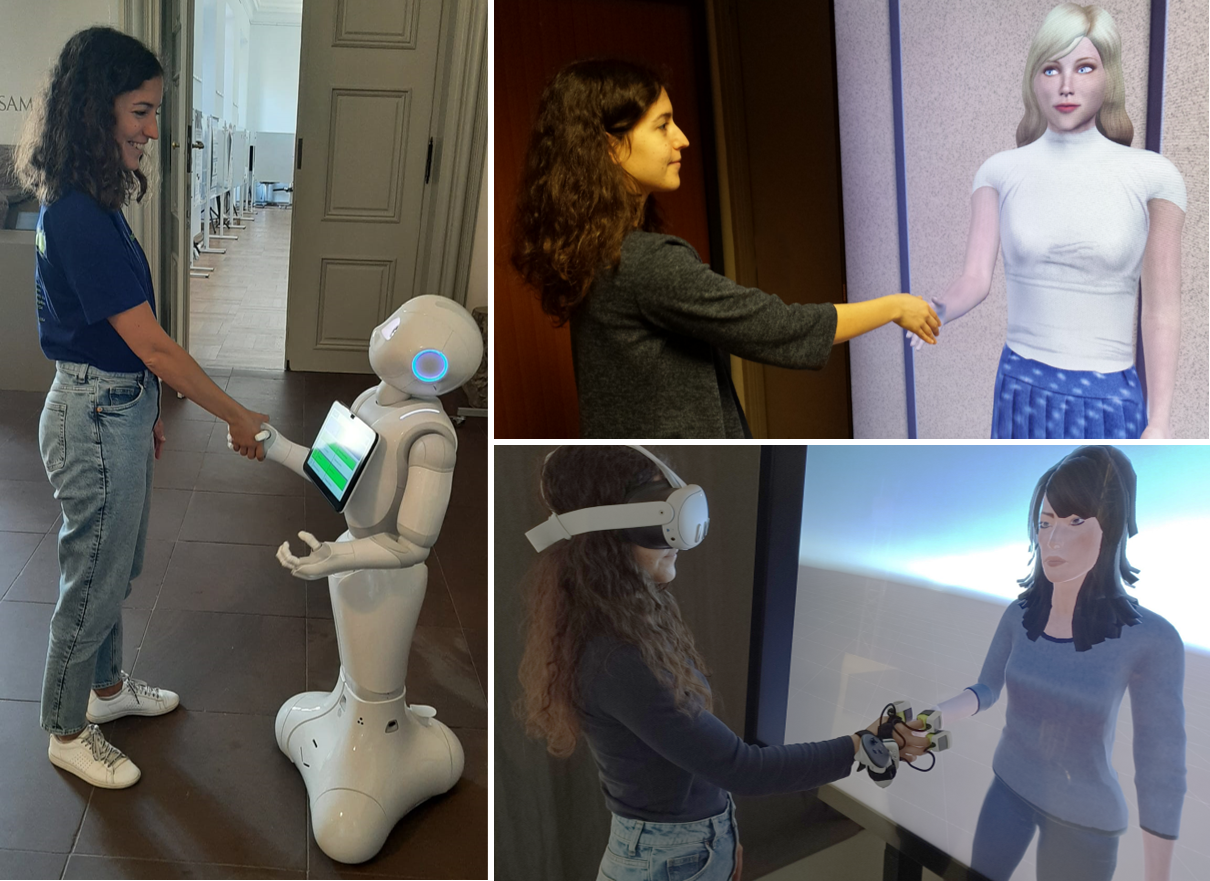

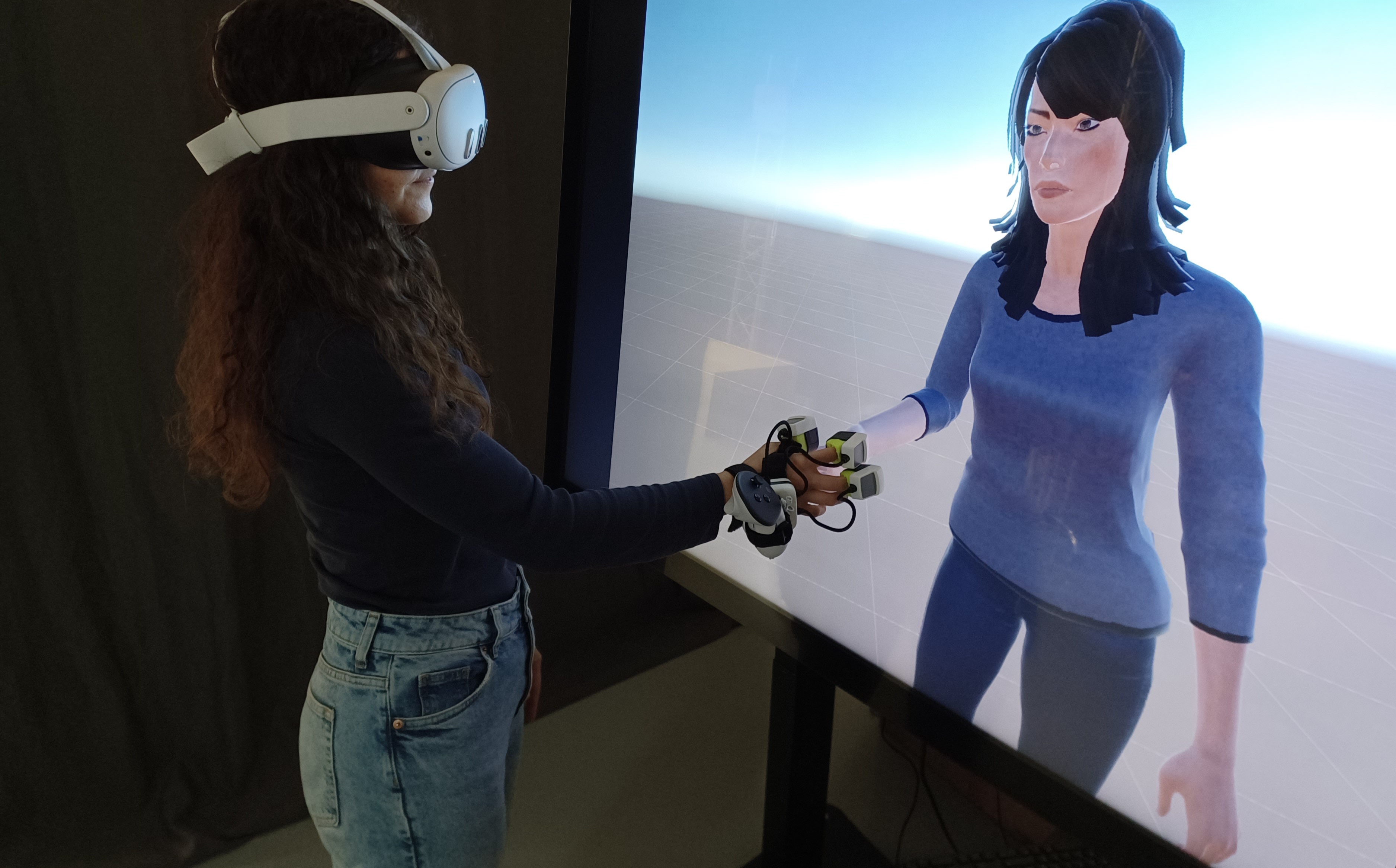

- Period: 2024 - to date

- Abstract: This project continues the investigation of first impressions in virtual encounters, focusing on the role of social touch.

- Collaborators: Laurence Chaby, Mukesh Barange

- Supervised Master students: Pierre-Louis Lefour, Matthieu Vigilant

- Publications: [Publication 1] | [Publication 2] | [Publication 3]

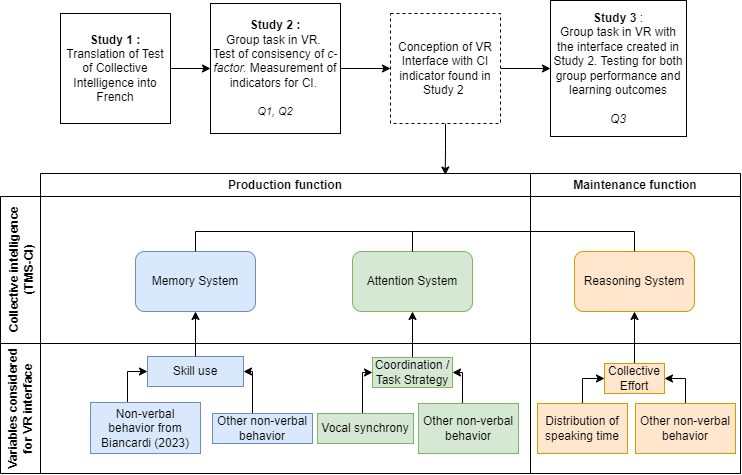

- Period: December 2023 - to date

- Funding: NEXUS project, winner of the national call for projects DEFFINUM; PEPR eNSEMBLE

- Abstract: Collective intelligence is a predictive measure of a group's ability to perform a wide variety of tasks. Several studies have examined its robustness in various environments, including face-to-face and computer-mediated communication, and even online video games. However, no study has investigated whether collective intelligence transfers to virtual reality and, consequently, how this medium may influence group dynamics. The aim of this project is threefold: (1) to understand how collective intelligence influences verbal and non-verbal group behaviour in an immersive environment, (2) to use these signals to develop an interface that enhances group dynamics in virtual reality, and (3) to apply these results to an immersive educational platform that improves group collaboration and learning. This work will have implications for theoretical knowledge of collective intelligence, as well as for the possibilities offered by immersive environments in an educational context. The work is part of the NEXUS project, whose goal is to develop collaborative virtual environments enabling users to create immersive experiences for educational purposes.

- Collaborators: Stéphanie Buisine, Mukesh Barange

- Supervised PhD students: Tristan Lannuzel

- Publications: [Publication 1] | [Publication 2] | [Publication 3]

- Period: October 2022 - to date

- Funding: NEXUS project, winner of the national call for projects DEFFINUM

- Abstract: The project focuses on the Proteus effect, which describes how users in a virtual environment unconsciously match their behaviour and attitudes to the stereotypes evoked by their avatar’s appearance. Beyond the theoretical understanding of this phenomenon, the project also aims to find innovative immersive applications of the Proteus effect in education. The results of the project include a theoretical framework for understanding the socio-cognitive and affective processes underlying the Proteus effect, as well as two experimental protocols to investigate the effect using technologies with different degrees of immersion.

- Collaborators: Stéphanie Buisine

- Supervised PhD students: Anna Martin Coesel

- Supervised Master students: Théa Sayegh

- Publications: [Publication 1] | [Publication 2] | [Publication 3]

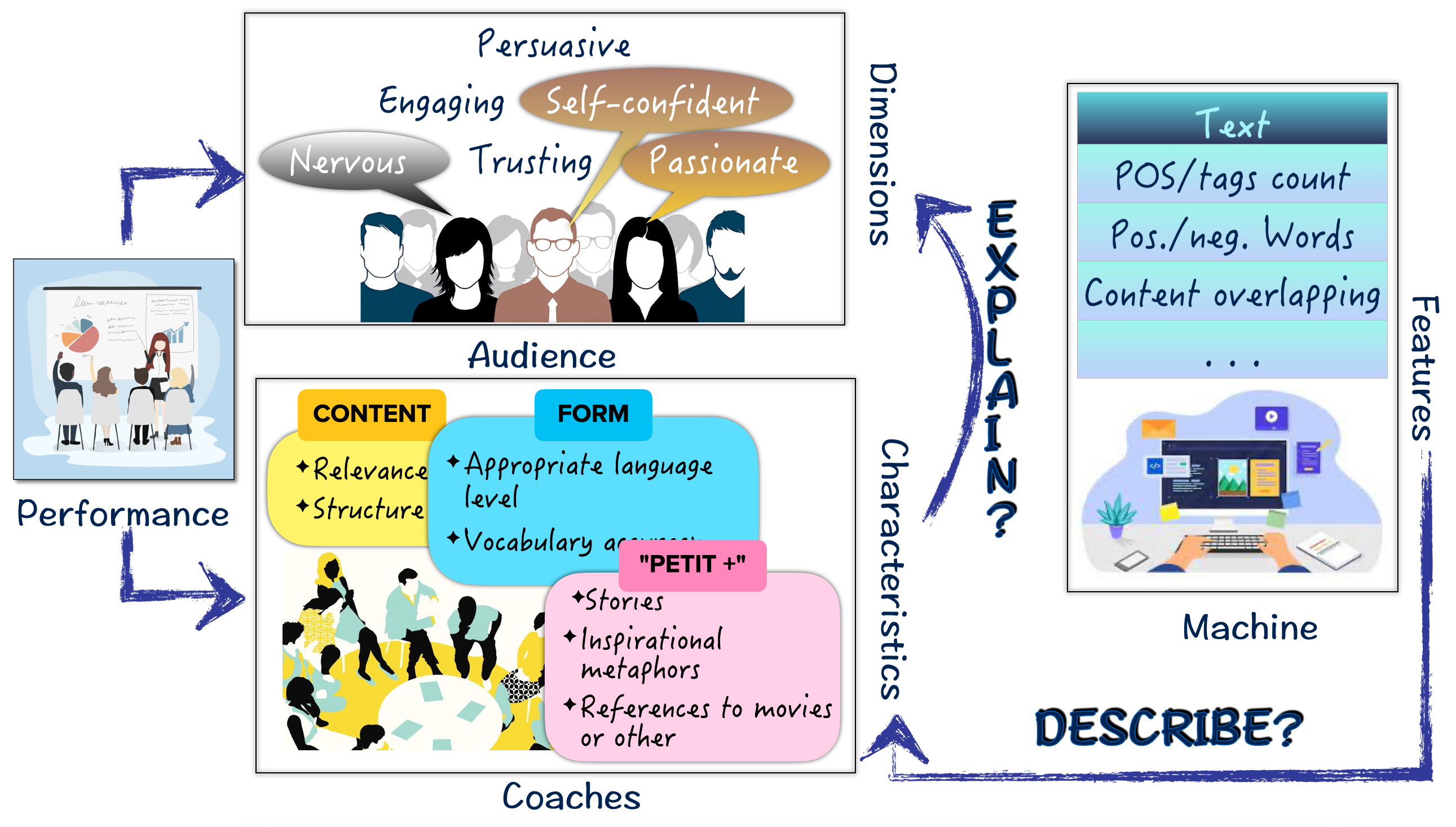

- Period: 2020 - to date

- Funding: InterCarnot AI4SoftSkills; ANR Revitalise (ANR-21-CE33-0016)

- Abstract: The aim of this project is to study explainable machine learning models for the automatic characterisation of public speaking. We aim to apply the knowledge derived from these models to provide users with feedback on their performance. The results of the project will advance the state of the art in machine learning applied to human social and communicative behaviour, as well as our understanding of the performance/applicability trade-off of these models. The first step of the work involved the analysis of an existing corpus to identify behavioural cues of speech quality, with a focus on whether the timing of these cues during a presentation has an impact on predicting the speaker's performance. A new corpus of public speaking, the 3MT_French Dataset, was then collected and is currently being analysed with a focus on textual features.

- Collaborators: Mathieu Chollet, Chloé Clavel

- Supervised PhD students: Alisa Barkar

- Supervised Master students: Yingjie Duan

- Publications: [Publication 1] | [Publication 2] | [Publication 3] | [Publication 4] | [Publication 5]

- Period: 2019 - 2020

- Funding: Télécom Paris; DATAIA project SLAM (ANR-17-CONV0003)

- Abstract: The project investigates the relationship between non-verbal behaviour and the Transactive Memory System (TMS), a group's emergent state characterizing the group's meta-knowledge about "who knows what". This will inform the design of human-centered computing applications able to automatically estimate TMS from teams’ behavioural patterns, to provide feedback and help teams’ interactions. The project results include the design and collection of a new corpus (the WoNoWa dataset), a first model of TMS dimensions as a linear combination of multimodal behaviour, as well as an experimental study and its follow-up investigating how people perceive different leadership strategies of an Embodied Conversational Agent (ECA) designed to help a team to develop its TMS.

- Collaborators: Giovanna Varni, Maurizio Mancini, Brian Ravenet, Patrick O'Toole

- Supervised Master students: Ivan Giaccaglia, Lou Maisonnave-Couterou, Pierrick Renault

- Publications: [Publication 1] | [Publication 2] | [Publication 3] | [Publication 4] | [Publication 5]

- Period: 2016 - 2019

- Funding: ANR French-Swiss Impressions (ANR-15-CE23-0023)

- Abstract: The project's goal is to model the non-verbal behaviours of an embodied conversational agent in order to improve the first moments of interaction with a user. This objective is achieved by building an interactive loop that links the agent's behaviour to the user's reaction in real time. The contributions of the project include a set of multimodal behaviours eliciting impressions of warmth and competence; a set of strategies for managing the impressions an agent gives; a reinforcement learning module for managing the agent's impressions that can be adapted to the agent's different goals; the integration of this adaptation module into an architecture for detecting and interpreting the user's multimodal reactions and generating the agent's behaviour; and the evaluation of the impression management model through two experiments.

- Collaborators: Catherine Pelachaud, Angelo Cafaro, Chen Wang, Guillaume Chanel, Thierry Pun

- Supervised Master students: Paul Lerner

- Publications: [Publication 1] | [Publication 2] | [Publication 3] | [Publication 4] | [Publication 5] | [Publication 6]

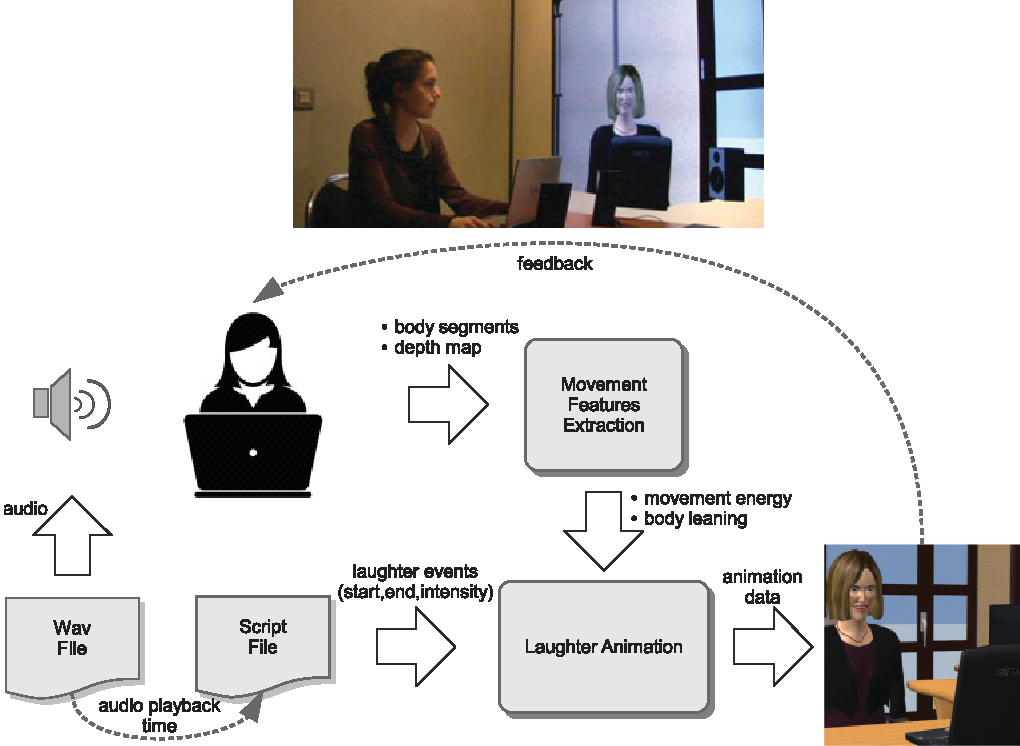

- Period: 2014 - 2015

- Funding: European FP7-ICT-FET project ILHAIRE

- Abstract: During my master's internship I was involved in a project on the implementation of laughter in embodied conversational agents, proposing an original approach that uses neural networks to reproduce human behaviours in the virtual agent. To evaluate this model, I designed and carried out a study of an agent whose laughter reproduces that of the user while listening to a humorous musical extract. Each participant listened to the music both without and with an agent who laughed with them. Depending on the condition, the agent's bodily expressivity either did or did not use the neural network to reproduce that of the participant. The results of this study show a positive effect of the agent's presence on the perception of the music and on mood. In addition, the agent reproducing the user's bodily expressivity influenced the perception of the music.

- Collaborators: Catherine Pelachaud, Florian Pecune, Maurizio Mancini, Giovanna Varni, Gualtiero Volpe

- Publications: [Publication 1] | [Publication 2] | [Publication 3]